Happy Halloween, everyone! We all celebrate Halloween at Open Robotics, no matter where we’re working from!

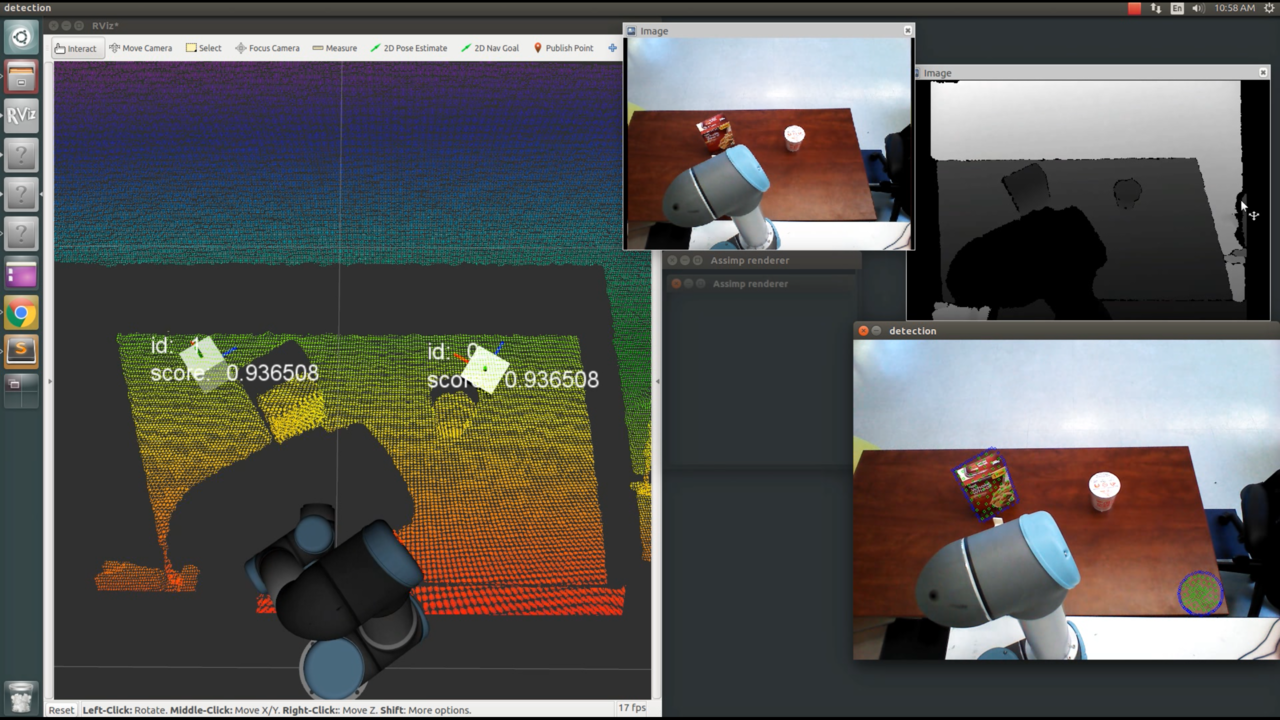

During his internship with Open Robotics, Adam Allevato ported a 3D object detector to ROS 2 and made it run on live depth camera data. The detector leverages ROS 2 features such as intra-process communication and shared memory to run with lower overhead than the ROS 1 version. Using the ros1_bridge, we can also interface the detector with ROS 1 tools (like RViz) if desired.

By porting a few other packages for vision and robot motion, we are now able to perform vision-based manipulation with an industrial robot arm using 100% ROS 2 code. We like to call this the “picky robot” because it can preferentially push food it doesn’t like off the table and into the trash.

For even more details, attend our upcoming ROSCon 2017 talk, “Using ROS2 for Vision-Based Manipulation with Industrial Robots.”

The demo is open source and available on GitHub (still in development). We’re excited to integrate even more use cases and platforms with ROS 2 moving forward!

by Deanna Hood

We are happy to announce the final results of the Agile Robotics for Industrial Automation Competition (ARIAC).

ARIAC is a simulation-based competition designed to promote agility in industrial robot systems by utilizing the latest advances in artificial intelligence and robot planning. The goal is to enable industrial robots on shop floors to be more productive, more autonomous, and more responsive to the needs of shop floor workers. The virtual nature of the competition enabled teams affiliated with companies and research institutions across three continents to participate.

While autonomously completing pick-and-place kit assembly tasks, teams were presented with various agility challenges developed based on input from industry representatives. These challenges included failing suction grippers, notifications of faulty parts, and the arrival of high-priority orders that prompted teams to decide whether or not to reuse existing in-progress kits.

Teams had control over their system’s suite of sensors positioned throughout the workcell, which included laser scanners, intelligent vision sensors, quality control sensors, and interruptible photoelectric break-beams. Each team in the finals chose a unique sensor configuration, with its own associated costs and impact on the team’s strategy.

The diversity in the teams’ strategies and the impact of their sensor configurations can be seen in the video of highlights from the finals:

Scoring was performed based on a combination of performance, efficiency and cost metrics over 15 trials. The overall standings of the top teams are as follows.

First place: Realization of Robotics Systems, Center for Advanced Manufacturing, University of Southern California

Second place: FIGMENT, Pernambuco Federal Institute of Education, Science, and Technology / Federal University of Pernambuco

Third place: TeamCase, Case Western Reserve University

Top-performing teams will be presenting at IROS 2017 in Vancouver, Canada in a workshop held on Sunday, September 24th. Details for interested parties are available at https://www.nist.gov/el/intelligent-systems-division-73500/agile-robotics-industrial-automation-competition-ariac

The IROS workshop is open to all, even those who did not compete. In addition to having presentations about approaches used in the competition, we will also be exploring plans for future competitions. If you would like to give a presentation about agility challenges you would like to see in future competitions, please contact Craig Schlenoff (craig.schlenoff@nist.gov).

Congratulations to all teams that participated in the competition. We look forward to seeing you in Vancouver!

by Tully Foote

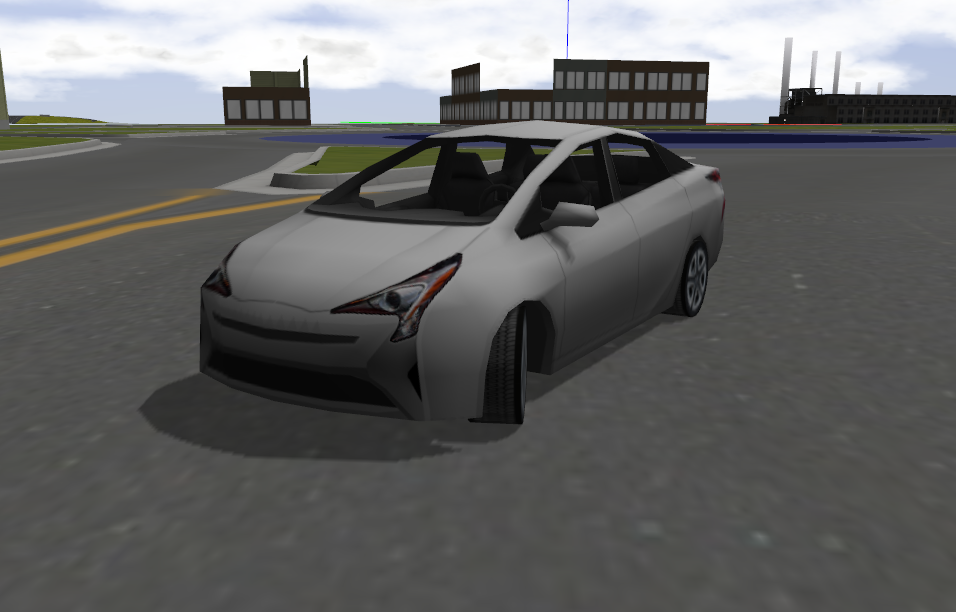

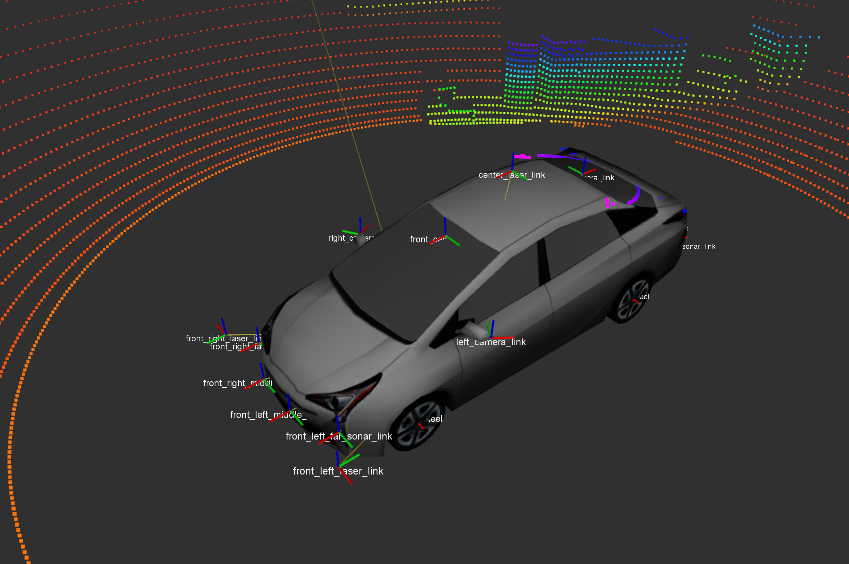

We are excited to show off a simulation of a Prius in Mcity using ROS Kinetic and Gazebo 8. ROS enabled the simulation to be developed faster by using existing software and libraries. The vehicle’s throttle, brake, steering, and transmission are controlled by publishing to a ROS topic. All sensor data is published using ROS, and can be visualized with RViz.

We leveraged Gazebo’s capabilities to incorporate existing models and sensors.

The world contains a new model of Mcity and a freeway interchange. There are also models from the Gazebo model repository, including dumpsters, traffic cones, and a gas station. On the vehicle itself there is a 16-beam lidar on the roof, 8 ultrasonic sensors, 4 cameras, and 2 planar lidars.

The simulation is open source and available on GitHub at osrf/car_demo. Try it out by installing nvidia-docker and pulling “osrf/car_demo” from Docker Hub. More information about building and running is available in the README in the source repository.

by caguero

Most of the interns and mentors spent a sunny day in San Francisco together. Our first stop was at Golden Gate park, where we fired up the grill to cook some tasty tri-tip and veggies before exercising our memory playing “Merci”.

We then switched gears at SPiN, a nice bar-restaurant where you can eat, drink, listen to music, and play ping pong.

Some of us discovered that 8 people can play ping pong on the same table at the same time!

Fun!

by Brian Gerkey

We’ve been working for the past several months with the NASA Ames Intelligent Robotics Group. We’re helping them to model and simulate their forthcoming Resource Prospector (RP) rover, which will be sent to one of the Moon’s poles to drill for water (as ice) and other volatiles. In advance of the mission, they’re using Gazebo to simulate RP to, among other things, support driving experiments with human operators. For these experiments, the visual realism of the camera images that we produce in Gazebo is paramount. So we’re very excited to share this recent result, which shows the view from a simulated camera mounted on the RP model being teleoperated over lunar terrain:

To create the scene shown in the video, we made several improvements to the Gazebo rendering component. First, we extended Gazebo’s ability to load and render a large, high-resolution (8K) DEM by 1) adding Level-Of-Detail (LOD) support, which made it possible to render large terrains with better performance; and 2) caching heightmap data to disk, which significantly reduced the model’s load time. We then added support for using custom shaders with Gazebo heightmaps. The heightmap model in the video uses a high-resolution texture map, normal map, bump map, and illumination map, and applies Hapke shading and real-time shadows in custom shaders. We also increased the shadow texture size and tweaked a few shadow parameters to generate sharper shadows. Finally, we post-processed the scene by adding a nice-looking lens flare effect to the camera.
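For readers curious what LOD selection involves, here is a minimal, generic sketch of distance-based LOD picking for terrain tiles. It is not Gazebo’s rendering code: the function names, the 50 m base distance, and the five-level cap are hypothetical values chosen only for illustration.

```python
import math


def select_lod(distance_m: float, base_distance_m: float = 50.0, max_level: int = 5) -> int:
    """Pick a level of detail for a terrain tile from its distance to the camera.

    Level 0 is full resolution. Each time the distance doubles beyond
    base_distance_m, the tile drops one level, up to max_level.
    (Thresholds here are hypothetical, not Gazebo's.)
    """
    if distance_m <= base_distance_m:
        return 0
    level = math.ceil(math.log2(distance_m / base_distance_m))
    return min(level, max_level)


def vertices_per_side(full_resolution: int, level: int) -> int:
    """Each LOD level halves the sampling density along each axis."""
    return max(2, full_resolution >> level)


# Example: an 8K heightmap tile viewed from various distances
for d in (10.0, 80.0, 150.0, 5000.0):
    lvl = select_lod(d)
    print(f"{d:>7.1f} m -> level {lvl}, {vertices_per_side(8192, lvl)} vertices per side")
```

The payoff is that distant terrain, which covers only a few pixels on screen, is drawn with a small fraction of the full-resolution vertices.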

We’re looking forward to further improving rendering in Gazebo and providing examples of what you can do to get more realistic camera images in your own simulations.

by Brian Gerkey

At our company fun day last month, we used our newly acquired glass mosaic and welding skills to collaboratively create a sign that shows off our new name and logo. And now it’s hanging up in the office:

Congratulations to everybody who contributed to the construction of the sign!

Michael Ferguson spent a year as a software engineer at Willow Garage, helping rewrite the ROS calibration system, among other projects. In 2013, he co-founded Unbounded Robotics, and is currently the CTO of Fetch Robotics. At Fetch, Michael is one of the primary people responsible for making sure that Fetch’s robots reliably fetch things. Mike’s ROSCon talk is about how to effectively use ROS as an integral part of your robotics business, including best practices, potential issues to avoid, and how you should handle open source and intellectual property.

Because of how ROS works, much of your software development (commercial or otherwise) depends on many external packages. These packages are constantly being changed for the better (and sometimes for the worse) at unpredictable intervals that are completely out of your control. Continuous integration, meaning systems that handle automated builds, testing, and deployment, can help you catch new problems as early as possible. Michael also notes that a useful way to avoid new problems is not to switch to new software as soon as it becomes available: instead, stick with long-term support releases, such as Ubuntu 14.04 and ROS Indigo.

While the foundation of ROS is built on open source, using ROS doesn’t mean that all of the software magic that you create for your robotics company has to be given away for free. ROS supports many different kinds of licenses, some of which your lawyers will be happier with than others, but there are enough options with enough flexibility that it doesn’t have to be an issue. Using Fetch Robotics as an example, Mike discusses what components of ROS his company uses in their commercial products, including ROS Navigation and MoveIt. With these established packages as a base, Fetch was able to quickly put together operational demos, and then iterate on an operating platform by developing custom plugins optimized for their specific use cases.

When considering how to use ROS as part of your company, it’s important to look closely at the packages you decide to incorporate, to make sure that they have a friendly license, good documentation, recent updates, built-in tests, and a standardized interface. Keeping track of all of this will make your startup life easier in the long run. As long as you’re careful, relying on ROS can make your company more agile, more productive, and ready to make a whole bunch of money off of the future of robotics.

~~~~~~~~~~~~~~~~~~~~

Next up: Ryan Gariepy (Clearpath Robotics)

It’s not sexy, but the next big thing for robots is starting to look like warehouse logistics. The potential market is huge, and a number of startups are developing mobile platforms to automate dull and tedious order fulfillment tasks. Transporting products is just one problem worth solving: picking those products off of shelves is another. Magazino is a German startup that’s developing a robot called Toru that can grasp individual objects off of warehouse shelves, a particularly tricky task that Magazino is tackling with ROS.

Moritz Tenorth is Head of Software Development at Magazino. In his ROSCon talk, Moritz describes Magazino’s Toru as “a mobile pick and place robot that works together with humans in a shared environment,” which is exactly what you’d want in an e-commerce warehouse. The reason that picking is a hard problem, as Moritz explains, is perception coupled with dynamic environments and high uncertainty: if you want a robot that can pick a wide range of objects, it needs to be able to flexibly understand and react to its environment, something that robots are notoriously bad at. ROS is particularly well suited to this, since it’s easy to intelligently integrate as much sensing as you need into your platform.

Magazino’s experience building and deploying their robots has given them a unique perspective on warehouse commercialization with ROS. For example, databases and persistent storage are crucial (as opposed to a focus on runtime), and real-time control turns out to be less important than being able to quickly and easily develop planning algorithms and reducing system complexity. Software components in the ROS ecosystem can vary wildly in quality and upkeep, although ROS-Industrial is working hard to develop code quality metrics. Magazino is also working on remote support and analysis tools, and trying to determine how much communication is required in a multi-robot system, which native ROS isn’t very good at.

Even with those (few) constructive criticisms in mind, Magazino says that ROS is a fantastic way to quickly iterate on both software and hardware in parallel, especially when combined with 3D printed prototypes for testing. Most importantly, Magazino feels comfortable with ROS: it has a familiar workflow, versatile build system, flexible development architecture, robust community that makes hiring a cinch, and it’s still (somehow) easy to use.

Next up: Michael Ferguson (Fetch Robotics)

Clearpath Robotics is best known for building yellow and black robots that are the research platforms you’d build for yourself; that is, if it weren’t much easier to just get them from Clearpath Robotics. All of their robots run ROS, and Clearpath has been heavily involved in the ROS community for years. Now with Locus Robotics, Tom Moore spent seven months as an autonomy developer at Clearpath. He is the author and maintainer of the robot_localization ROS package, and gave a presentation about it at ROSCon 2015.

robot_localization is a general purpose state estimation package that’s used to give you (and your robot) an accurate sense of where it is and what it’s doing, based on input from as many sensors as you want. The more sensors that you’re able to use for a state estimate, the better that estimate is going to be, especially if you’re dealing with real-worldish things like unreliable GPS or hardware that flakes out on you from time to time. robot_localization has been specifically designed to be able to handle cases like these, in an easy to use and highly customizable way. It has state estimation in 3D space, gives you per-sensor message control, allows for an unlimited number of sensors (just in case you have 42 IMUs and nothing better to do), and more.
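robot_localization is built around Kalman filters (it provides extended and unscented Kalman filter nodes). As a rough illustration of why every extra sensor tightens the estimate, here is a one-dimensional Kalman filter sketch. The state, noise values, and function names are hypothetical, and the package’s real filters track a full 3D state rather than a single coordinate.

```python
from dataclasses import dataclass


@dataclass
class Estimate1D:
    mean: float
    variance: float


def predict(est: Estimate1D, velocity: float, dt: float, process_var: float) -> Estimate1D:
    """Motion step: move the mean forward and grow the uncertainty."""
    return Estimate1D(est.mean + velocity * dt, est.variance + process_var)


def update(est: Estimate1D, measurement: float, meas_var: float) -> Estimate1D:
    """Scalar Kalman update: blend in a measurement weighted by relative confidence."""
    gain = est.variance / (est.variance + meas_var)
    return Estimate1D(est.mean + gain * (measurement - est.mean), (1.0 - gain) * est.variance)


# Hypothetical readings of position along one axis (meters)
est = Estimate1D(mean=0.0, variance=1.0)
est = predict(est, velocity=0.5, dt=1.0, process_var=0.1)  # time passes, no sensor yet
est = update(est, measurement=0.9, meas_var=4.0)           # noisy GPS fix
est = update(est, measurement=0.6, meas_var=0.5)           # wheel odometry
print(est)
```

Each `update` call shrinks the variance, and a noisy sensor (large `meas_var`) simply gets a small gain. If a sensor flakes out, you skip its update and keep predicting; the variance grows until good data returns.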

Tom’s ROSCon talk takes us through some typical use cases for robot_localization, describes where the package fits in with the ROS navigation stack, explains how to prepare your sensor data, and how to configure estimation nodes for localization. The talk ends with a live(ish) demo, followed by a quick tutorial on how to convert data from your GPS into your robot’s world frame.

The robot_localization package is up to date and very well documented, and you can learn more about it on the ROS Wiki.

Next up: Moritz Tenorth, Ulrich Klank, & Nikolas Engelhard (Magazino GmbH)

Matt Vollrath and Wojciech Ziniewicz work at an e-commerce consultancy called End Point, where they provide support for Liquid Galaxy, a product that’s almost as cool as it sounds. Originally an open source project begun by Google engineers on their twenty percent time, Liquid Galaxy is a data visualization system consisting of a collection of large vertical displays that wrap around you horizontally. The displays show an immersive (up to 270°) image that’s ideal for data presentations, virtual tours, Google Earth, or anywhere you want a visually engaging environment. Think events, trade shows, offices, museums, galleries, and the like.

Last year, End Point decided to take all of the ad hoc services and protocols that they’d been using to support Liquid Galaxy and move everything over to ROS. The primary reason to do this was ROS support for input devices: you can use just about anything to control a Liquid Galaxy display system, from basic touchscreens to Space Navigator 3D mice to Leap Motions to depth cameras. The modularity of ROS is inherently friendly to all kinds of different hardware.

Check out this week’s ROSCon15 video as Matt and Wojciech take a deep dive into their efforts in bringing ROS to bear for these unique environments.

Next up: Tom Moore (Clearpath Robotics)

ROS already comes with a fantastic built-in visualization tool called rviz, so why would you want to use anything else? At Southwest Research Institute, Jerry Towler explains how they’ve created a new visualization tool called Mapviz that’s specifically designed for the kind of large-scale outdoor environments necessary for autonomous vehicle development. Specifically, Mapviz is able to integrate all of the sensor data that you need on top of a variety of two-dimensional maps, such as road maps or satellite imagery.

As an autonomous vehicle visualization tool, Mapviz works just as you’d expect it to, as Jerry showed in several demos at ROSCon. Mapviz shows you a top-down view of where your vehicle is, and tracks it across a basemap that seamlessly pulls image tiles at multiple resolutions from a wide variety of local or networked map servers, including Open MapQuest and Bing Maps. Mapviz is, of course, very plugin-friendly. You can add things like stereo disparity feeds, GPS fixes, odometry, grids, pathing data, image overlays, projected laser scans, markers (including textured markers) from most sensor types, and more. It can’t really handle three-dimensional data (although it’ll do two-and-a-half dimensions via color gradients), but for interactive tracking of your vehicle’s navigation and path planning behavior, Mapviz should offer most of what you need.
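Many online map servers use the Web Mercator “slippy map” tiling scheme, where each zoom level doubles the number of tiles along each axis. As a sketch, assuming that scheme (Mapviz’s own tile-loading code is not shown here, and the function name and coordinates are hypothetical), this is how a vehicle’s latitude and longitude map to the tile that has to be fetched:

```python
import math
from typing import Tuple


def latlon_to_tile(lat_deg: float, lon_deg: float, zoom: int) -> Tuple[int, int]:
    """Return the (x, y) Web Mercator tile containing a point at the given zoom level."""
    # Web Mercator cannot represent the poles; clamp to its usual latitude limit
    lat_deg = max(min(lat_deg, 85.05112878), -85.05112878)
    n = 2 ** zoom  # tiles per axis at this zoom level
    x = int((lon_deg + 180.0) / 360.0 * n)
    lat_rad = math.radians(lat_deg)
    y = int((1.0 - math.asinh(math.tan(lat_rad)) / math.pi) / 2.0 * n)
    # Longitude +180 and the clamped southern edge would land one past the last tile
    return min(x, n - 1), min(y, n - 1)


# Example: the tile covering a hypothetical vehicle position at two zoom levels
for z in (10, 17):
    print(z, latlon_to_tile(29.45, -98.61, z))
```

Fetching tiles at several zoom levels around the current tile is what lets a viewer show a coarse basemap when zoomed out and sharp imagery up close.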

For a variety of non-technical reasons, SwRI hasn’t been able to release all of its tools and plugins as open source quite yet, but they’re working on getting approval as fast as they can. They’re also in the process of developing even more enhancements for Mapviz, and you can keep up to date with the latest version of the software on GitHub.

Next up: Matt Vollrath & Wojciech Ziniewicz (End Point)

BMW has been working on automated driving for the last decade, steadily implementing more advanced features ranging from emergency stop assistance and autonomous highway driving to fully automated valet parking and 360° collision avoidance. Several of these projects were presented at the 2015 Consumer Electronics Show, and as it turns out, the cars were running ROS for both environment detection and planning.

BMW, being BMW, has no problem getting new research hardware. Their latest development platform is the 335I G. This model comes with an advanced driver assistance system based around cameras and radar. The car has been outfitted with four low-profile laser scanners and one long-range radar, but otherwise, it’s pretty close (in terms of hardware) to what’s available in production BMWs.

Why did BMW choose to move from their internally developed software architecture to ROS? Michael Aeberhard explains how ROS’ reputation in the robotics research community prompted his team to give it a try, and they were impressed with its open source nature, distributed architecture, existing selection of software packages, as well as its helpful community. “A large user base means stability and reliability,” Michael says, “because somebody else probably already solved the problem you’re having.” Additionally, using ROS rather than a commercial software platform makes it much easier for BMW to cooperate with universities and research institutions.

Michael discusses the ROS software architecture that BMW is using to do its autonomous car development, and shows how the software interprets the sensor data to identify obstacles and lane markings and do localization and trajectory planning to enable full highway autonomy, based on a combination of lane keeping and dynamic cruise control. BMW also created their own suite of RQT and rviz plugins specifically designed for autonomous vehicle development.

After about two years of experience with ROS, BMW likes a lot of things about it, but Michael and his team do have some constructive criticisms: message transport needs more work (although ROS 2 should help with this), managing configurations for different robots is problematic, and it’s difficult to enforce compliance with industry standards like ISO and AUTOSAR, which will be necessary for software that’s usable in production vehicles.

Next up: Jerry Towler & Marc Alban (SwRI)

While Intel is best known for making computer processors, the company is also interested in how people interact with all of the computing devices that have Intel inside. In other words, Intel makes brains, but they need senses to enable those brains to understand the world around them. Intel has developed two very small and very cheap 3D cameras (one long range and one short range) called RealSense, with the initial intent of putting them into devices like laptops and tablets for applications such as facial recognition and gesture tracking.

Robots are also in dire need of capable and affordable 3D sensors for navigation and object recognition, and fortunately, Intel understands this, and they’ve created the RealSense Robotics Innovation Program to help drive innovation using their hardware. Intel itself isn’t a robotics company, but as Amit explains in his ROSCon talk, they want to be a part of the robotics future, which is why they prioritized ROS integration for their RealSense cameras.

A RealSense ROS package has been available since 2015, and Intel has been listening to feedback from roboticists and steadily adding more features. The package provides access to the RealSense camera data (RGB, depth, IR, and point cloud), and will eventually include basic computer vision functions (including plane analysis and blob detection) as well as more advanced functions like skeleton tracking, object recognition, and localization and mapping tools.
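Turning a depth image into a point cloud is a standard pinhole-camera back-projection. The sketch below assumes an undistorted depth image already converted to meters and uses hypothetical intrinsics (`fx`, `fy`, `cx`, `cy`); a real pipeline would use the calibrated values reported by the camera and account for lens distortion. The function names are illustrative, not part of the RealSense package.

```python
from typing import List, Sequence, Tuple

Point3D = Tuple[float, float, float]


def deproject(u: float, v: float, depth_m: float,
              fx: float, fy: float, cx: float, cy: float) -> Point3D:
    """Back-project pixel (u, v) with depth (meters) into the camera frame (pinhole model)."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def depth_to_points(depth: Sequence[Sequence[float]],
                    fx: float, fy: float, cx: float, cy: float) -> List[Point3D]:
    """Convert a row-major depth image into a list of 3D points, skipping invalid pixels."""
    points: List[Point3D] = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z > 0.0:  # zero depth commonly marks "no reading" for a pixel
                points.append(deproject(u, v, z, fx, fy, cx, cy))
    return points


# Hypothetical intrinsics for a 640x480 depth image
FX = FY = 600.0
CX, CY = 320.0, 240.0
print(deproject(920, 240, 1.0, FX, FY, CX, CY))  # (1.0, 0.0, 1.0)
```

A pixel one focal length to the right of the image center, seen at 1 m, lands 1 m to the right of the optical axis, which is a quick sanity check for any intrinsics you plug in.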

Intel RealSense 3D camera developer kits are available now, and you can order one for as little as $99.

Next up: Michael Aeberhard, Thomas Kühbeck, Bernhard Seidl, et al. (BMW Group Research and Technology)

Check out last week’s post: The Descartes Planning Library for Semi-Constrained Cartesian Trajectories

Descartes is a path planning library that’s designed to solve the problem of planning with semi-constrained trajectories. Semi-constrained means that the degrees of freedom of the path you need to plan are fewer than the degrees of freedom that your robot has. In other words, when planning a path, there are one or more “free” axes that your robot has to work with that can be moved any which way without disrupting the path. This can open up the planning space if you can utilize them creatively, which traditional robots (especially in the industrial space) usually can’t. This results in reduced workspaces and (most dangerous of all) increased reliance on human intuition during the planning process.
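One way to exploit a free axis is to sample it at each waypoint, solve inverse kinematics for each sample, and then search for the sequence of joint solutions with the smallest total joint motion. The sketch below shows only that final search step, as a dynamic program over a “ladder” of candidates. It illustrates the general graph-search idea rather than Descartes’ own API; the function names and the joint-distance cost are assumptions (a real planner would also check collisions, joint limits, and velocities).

```python
from typing import List, Sequence

JointState = Sequence[float]


def joint_distance(a: JointState, b: JointState) -> float:
    """Cost of moving between two joint configurations (sum of absolute joint deltas)."""
    return sum(abs(x - y) for x, y in zip(a, b))


def plan_ladder(rungs: List[List[JointState]]) -> List[JointState]:
    """Choose one joint solution per waypoint so total joint motion is minimal.

    rungs[k] holds the candidate IK solutions for waypoint k, e.g. one per
    sampled angle of the free axis.
    """
    if not rungs or any(len(r) == 0 for r in rungs):
        raise ValueError("every waypoint needs at least one joint solution")
    cost = [0.0] * len(rungs[0])  # best cost to reach each candidate so far
    back: List[List[int]] = []     # back[k][i]: best predecessor in rung k for candidate i of rung k+1
    for prev, curr in zip(rungs, rungs[1:]):
        new_cost, new_back = [], []
        for c in curr:
            j = min(range(len(prev)), key=lambda p: cost[p] + joint_distance(prev[p], c))
            new_cost.append(cost[j] + joint_distance(prev[j], c))
            new_back.append(j)
        cost = new_cost
        back.append(new_back)
    # Walk the back-pointers from the cheapest final candidate
    i = min(range(len(cost)), key=cost.__getitem__)
    path = [rungs[-1][i]]
    for k in range(len(back) - 1, -1, -1):
        i = back[k][i]
        path.append(rungs[k][i])
    path.reverse()
    return path


# Three waypoints of a 1-DOF toy example, each with candidate solutions
ladder = [[(0.0,), (3.0,)], [(0.5,), (2.0,)], [(1.0,)]]
print(plan_ladder(ladder))  # [(0.0,), (0.5,), (1.0,)]
```

Because every sampled value of the free axis becomes a candidate, the search can find smooth motions that a fully constrained plan (one fixed tool orientation per point) would miss.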

Descartes was designed to generate common sense plans, exhibiting similar characteristics to paths planned by a human. It can solve easy problems quickly, and difficult problems eventually, integrating hybrid trajectories and dynamic replanning. It’s easy to use, with a GUI that allows you to quickly set anchor points that the robot replans around, with visual confirmation of the new path. The second half of Shaun’s ROSCon talk is an in-depth explanation of Descartes’ interfaces and implementations intended for path planning fans (you know who you are).

As with many (if not most) of the projects being presented at ROSCon, Descartes is open source, and all of the development is public. If you’d like to try it out, the current stable release runs on ROS Hydro, and a tutorial is available on the ROS Wiki to help you get started.

Next up: Amit Moran & Gila Kamhi (Intel)

Check out last week’s post: Phobos — Robot Model Development on Steroids

by Tully Foote

To model a robot in rviz, you first need to create what’s called a Unified Robot Description Format (URDF) file, which is an XML-formatted text file that represents the physical configuration of your robot. Fundamentally, it’s not that hard to create a URDF file, but for complex robots, these files tend to be enormously complicated and very tedious to put together. At the University of Bremen, Kai von Szadkowski was tasked with developing a URDF model for a 60-degree-of-freedom robot called MANTIS (Multi-legged Manipulation and Locomotion System). Kai got a bit fed up with the process and developed a better way of doing it, called Phobos.

http://robotik.dfki-bremen.de/en/research/robot-systems/mantis.html

Phobos is an add-on for a piece of free and open-source 3D modeling and rendering software called Blender. Using Blender, you can create armatures, which are essentially kinematic skeletons that you can use to animate a 3D character. As it turns out, there are some convenient parallels between URDF models and 3D models in Blender: the links and joints in a URDF file equate to armatures and bones in Blender, and both use similar hierarchical structures to describe their models. Phobos adds a new toolbar to Blender that makes it easy to edit these models by adding links, motors, sensors, and collision geometries. You can also leverage Blender’s Python scripting environment to automate as much of the process as you’d like. Additionally, Phobos comes with a sort of “robot dictionary” in Python that manages all of the exporting to URDF for you.
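To make the URDF side of this concrete, here is a small sketch that turns a Python dictionary describing a robot into URDF XML using only the standard library. The dictionary layout and the `to_urdf` function are hypothetical and far simpler than Phobos’s actual exporter; they only show how links and joints map onto URDF elements.

```python
import xml.etree.ElementTree as ET

# Hypothetical "robot dictionary"; Phobos's real data structure differs.
robot = {
    "name": "two_link_arm",
    "links": ["base_link", "upper_arm"],
    "joints": [
        {"name": "shoulder", "type": "revolute", "parent": "base_link",
         "child": "upper_arm", "xyz": (0.0, 0.0, 0.1), "axis": (0, 0, 1),
         # URDF requires <limit> on revolute joints
         "limits": {"lower": -1.57, "upper": 1.57, "effort": 10.0, "velocity": 1.0}},
    ],
}


def to_urdf(robot: dict) -> str:
    """Serialize the dictionary above into a URDF <robot> element."""
    root = ET.Element("robot", name=robot["name"])
    for link in robot["links"]:
        ET.SubElement(root, "link", name=link)
    for j in robot["joints"]:
        joint = ET.SubElement(root, "joint", name=j["name"], type=j["type"])
        ET.SubElement(joint, "parent", link=j["parent"])
        ET.SubElement(joint, "child", link=j["child"])
        ET.SubElement(joint, "origin", xyz=" ".join(str(v) for v in j["xyz"]), rpy="0 0 0")
        ET.SubElement(joint, "axis", xyz=" ".join(str(v) for v in j["axis"]))
        if "limits" in j:
            ET.SubElement(joint, "limit", {k: str(v) for k, v in j["limits"].items()})
    return ET.tostring(root, encoding="unicode")


print(to_urdf(robot))
```

Even this toy exporter hints at why generating URDF from a higher-level model pays off: for a 60-DOF robot, writing every joint element by hand is where the tedium and the mistakes come from.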

Since the native URDF format can’t handle all of the information that can be incorporated into your model in Blender, Kai proposes an extended version of URDF called SMURF (Supplemental Mostly Universal Robot Format) that adds YAML files to a URDF, supporting annotations for sensors, motors, and anything else you’d like to include.

If any of this sounds good to you, it’s easy to try it out: Blender is available for free, and Phobos can be found on GitHub.

The ROS Industrial Consortium was established four years ago as a partnership between Yaskawa Motoman Robotics, Southwest Research Institute (SwRI), Willow Garage, and Fraunhofer IPA. The idea was to provide a ROS-based open-source framework for robotics applications, designed to make it easy (or at least possible) to leverage advanced ROS capabilities (like perception and planning) in industrial environments. Basically, ROS-I adds models, libraries, drivers, and packages to ROS that are specifically designed for manufacturing automation, with a focus on code quality and end user reliability.

Mirko Bordignon from Fraunhofer IPA opened the final ROSCon 2016 keynote by pointing out that ROS is still heavily focused on research and service robotics. This isn’t a bad thing, but with a little help, there’s an enormous opportunity for ROS to transform industrial robotics as well. Over the past few years, the ROS Industrial Consortium has grown into two international consortia (one in America and one in Europe), comprising over thirty members that provide financial and managerial support to the ROS-I community.

To help companies get more comfortable with the idea of using ROS in their robots, ROS-I holds frequent training sessions and other outreach events. “People out there are realizing that at least they can’t ignore ROS, and that they actually might benefit from it,” Bordignon says. And companies are benefiting from it, with ROS starting to show up in a variety of different industries in the form of factory floor deployments as well as products.

Bordignon highlights a few of the most interesting projects that the ROS-I community is working on at the moment, including a CAD to ROS workbench, getting ROS to work on PLCs, and integrating the OPC data protocol, which is common to many industrial systems.

Before going into deeper detail on ROS-I’s projects, Shaun Edwards from SwRI talks about how the fundamental idea for a ROS-I consortium goes back to one of their first demos. The demo was of a PR2 using 3D perception and intelligent path planning to pick up objects off of a table. “[Companies were] impressed by what they saw at Willow Garage, but they didn’t make the connection: that they could leverage that work,” Edwards explains. SwRI then partnered with Yaskawa to get the same software running on an industrial arm, “and this alone really sold industry on ROS being something to pay attention to,” says Edwards.

Since 2014, ROS-I has been refining a general purpose Calibration Toolbox for industrial robots. The goal is to streamline an otherwise time-consuming (and annoying) calibration process. This toolbox covers robot-to-camera calibration (with both stationary and mobile cameras), as well as camera-to-camera calibration. Over the next few months, ROS-I will be releasing templates for common calibration use cases to make it as easy as possible.

Path planning is another ongoing ROS-I project, as is ROS support for CANopen devices (to enable IoT-type networking), and integrated motion planning for mobile manipulators. ROS-I actually paid the developers of the ROS mobile manipulation stack to help with this. “Leveraging the community this way, and even paying the community, is a really good thing, and I’d like to see more of it,” Edwards says.

To close things out, Edwards briefly touches on the future of ROS-I, including the seamless fusion of 3D scanning, intelligent planning, and dynamic manipulation, which is already being sponsored by Boeing and Caterpillar. If you’d like to get involved in ROS-I, they’d love for you to join them, and even if you’re not directly interested in industrial robotics, there are still plenty of opportunities to be part of a more inclusive and collaborative ROS ecosystem.

Next up: Kai von Szadkowski (University of Bremen)

Check out last week’s post: MoveIt! Strengths, Weaknesses, and Developer Insight

by Tully Foote

Dave Coleman has worked in (almost) every robotics lab there is: Willow Garage, JSK Humanoids Lab in Tokyo, Google, CU Boulder, and (of course) OSRF. He’s also the owner of PickNik, a ROS consultancy that specializes in training robots to destructively put packages of Oreo cookies on shelves. Dave has been working on MoveIt! since before it was first released, and to kick off the second day of ROSCon, he gave a keynote to share everything he knows about motion planning in ROS.

MoveIt! is a flexible and robot agnostic motion planning framework that integrates manipulation, 3D perception, kinematics, control, and navigation. It’s a collaboration between lots of people across many different organizations, and is the third most popular ROS package with a fast-growing community of contributors. It’s simple to set up and use, and for beginners, a plugin lets you easily move your robot around in Rviz.

As a MoveIt! pro, Dave offers a series of pro tips on how to get the most out of your motion planner. For example, he suggests that researchers try using C++ classes individually to avoid getting buried in a bunch of layered services and actions. This makes it easier to figure out why your code doesn’t work. Dave also describes his experience in the Amazon Picking Challenge, held last year at ICRA in Seattle.

MoveIt! is great, but there’s still a lot of potential for improvement. Dave discusses some of the things that he’d like to see, including better reliability (and more communicative failures), grasping support, and, as always, more documentation and better tutorials. A recent MoveIt! community meeting resulted in a future roadmap that focuses on better humanoid kinematic support and support for other types of planners, as well as integrated visual servoing and easy access to calibration packages.

Dave ends with a reminder that progress is important, even if it’s often at odds with stability. Breaking changes are sometimes necessary in order to add valuable features to the code. As with much of ROS, MoveIt! depends on the ROS community to keep it capable and relevant. If you’re an expert in one of the components that makes MoveIt! so useful, you should definitely consider contributing back with a plug-in that others can take advantage of.

Next up: Mirko Bordignon (Fraunhofer IPA), Shaun Edwards (SwRI), Clay Flannigan (SwRI), et al.

Check out last week’s post: Real-time Performance in ROS 2

Jackie Kay was upgraded from OSRF intern to full-time software engineer in 2014. Her background includes robotics education and path planning for autonomous lunar rovers. More recently, she’s been working on bringing real-time computing to ROS 2.

Real-time computing isn’t about computing at a certain speed; it’s about computing on schedule. It means that your system can return data reliably and on time, in situations where responding late is usually a bad thing, and sometimes a really bad thing. Hard real-time computing is important in safety-critical applications (like nuclear reactors, spacecraft, and autonomous vehicles), where taking too long to think about something could result in a figurative or literal crash (or both). Soft real-time computing is a bit more forgiving: things running behind have a cost, but the data are still usable, as with packets arriving out of order while streaming video. And in between there’s firm real-time computing, where missing deadlines is definitely bad but nothing explodes (or things only explode a little bit), like on a robotic assembly line.
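The difference between speed and schedule is easy to see in numbers. This sketch uses a hypothetical function and made-up timings (in microseconds) for a 1 kHz loop; it compares average latency with worst-case latency and flags deadline misses. It is not the benchmark code from Jackie’s talk.

```python
from statistics import mean
from typing import Dict, List, Sequence


def deadline_report(release_us: Sequence[int], finish_us: Sequence[int],
                    deadline_us: int) -> Dict[str, object]:
    """Summarize job latencies and list which jobs missed their deadline.

    A hard real-time system must have no misses at all; firm and soft
    real-time systems can tolerate some, at a cost.
    """
    latencies = [f - r for r, f in zip(release_us, finish_us)]
    misses: List[int] = [i for i, lat in enumerate(latencies) if lat > deadline_us]
    return {
        "mean_latency_us": mean(latencies),
        "worst_latency_us": max(latencies),
        "missed_jobs": misses,
    }


# Made-up timings for a 1 kHz control loop (1000 us period, deadline = period)
releases = [0, 1000, 2000, 3000]
finishes = [200, 1200, 3500, 3200]
print(deadline_report(releases, finishes, deadline_us=1000))
```

Here the mean latency (525 us) is comfortably under the 1000 us deadline, yet job 2 takes 1500 us and misses it. That is why real-time work focuses on worst-case behavior rather than average speed.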

Making a system that’s adaptable and reliable, especially in the context of commercialization, often requires real-time computing, and this is why integrating real-time compatibility is one of the primary goals of ROS 2. Jackie’s keynote addresses many of the technical details underlying the ROS 2 real-time approach, including scheduling, memory management, node design, and communications strategies. To illustrate the improvements that ROS 2 offers over ROS 1, Jackie shares benchmarking results from a ROS 2 demo running in real time, showing that even under stress, implementing a high-performance soft real-time system in ROS 2 looks promising.

To try real-time computing in ROS 2 for yourself, you can download an Alpha release and play around with a demo here: https://github.com/ros2/ros2/wiki/Real-Time-Programming

ROSCon 2015 Hamburg: Day 1 – Jackie Kay: Real-time Performance in ROS 2 from OSRF on Vimeo.

Next up: Dave Coleman (University of Colorado Boulder)

Check out last week’s post: State of ROS 2

ROS has been an enormously important resource for the robotics community. It turned eight years old at the end of 2015, and is currently on its ninth official release. As ROS adoption has skyrocketed (especially over the past several years), OSRF, together with the community, has identified many specific areas of the operating system that need major overhauls in order to keep pace with maturing user demand. Dirk Thomas, Esteve Fernandez, and William Woodall from OSRF gave a preview at ROSCon 2015 of what to expect in ROS 2, including multi-robot systems, commercial deployments, microprocessor compatibility, real-time control, and additional platform support.

The OSRF team shows off many of the exciting new ROS 2 features in this demo-heavy talk, including distributed message passing through DDS (no ROS master required), performance boosts for communications within nodes, quality of service improvements, and ways of bridging ROS 1 and ROS 2 so that you don’t have to make the leap all at once. If you’d like to make the leap all at once anyway, the Alpha 1 release of ROS 2 has been available since last September, and Thomas ends the talk with a brief overview of the roadmap leading up to ROS 2’s Alpha 2 release. As of April 2016, ROS 2 is on release Alpha 5 (“Epoxy”), and you can keep up-to-date on the roadmap and release schedule here.

ROSCon 2015 Hamburg: Day 1 – Dirk Thomas: State of ROS 2 – demos and the technology behind from OSRF on Vimeo.

Next up: Jackie Kay (OSRF) & Adolfo Rodríguez Tsouroukdissian (PAL Robotics)

Check out last week’s post: Lightning Talk highlights

OSRF Software Engineer Louise Poubel was recently asked by Scientific Computing magazine to write an article about her upcoming presentation on open source robotics at the IEEE Women in Engineering International Leadership Conference. Check out the excerpt below.

These are exciting times for robotics. In the past few years, we’ve started seeing robotic products make their way into our daily lives. We’re buying robotic vacuum cleaners and toy quadcopters. If you’re lucky enough, you’ve seen a robot dancing at a presentation, had a robot give you information at a store, or received a robot delivery at a hotel. Things that seemed like science fiction just a few years ago are looking like they could become reality in our lifetimes. We can more clearly see a future with package delivery drones and self-driving cars.

From ros.org:

Today may be April 1st, but this is no April Fools’ Joke: ROSCon 2016 will take place in Seoul, South Korea, on October 8th and 9th! We’re very excited to get the ROS community together again to share all of the exciting work that has happened over the last year. ROSCon will directly precede IROS, which is in nearby Daejeon, Korea this year. If you’re already planning to attend IROS, just tack on a couple of extra days and join us in Seoul!

Stay tuned to the ROSCon 2016 website for updates and submission deadlines. We look forward to seeing you in Seoul later this year!

The growing popularity of ROSCon means that it’s not always possible to schedule presentations for everyone who wants to give one. In addition, many people have projects that they’d like to share, but don’t need a full twenty minutes to present. That’s why forty minutes of each day at ROSCon are set aside for any attendee to present anything they want, all in a heartlessly rigid three-minutes-or-less format. Here are a few highlights:

Victor is the CTO and co-founder of Erle Robotics. The Erle-Brain 2 is an open source, open hardware controller for robots based on the Raspberry Pi 2. It runs ROS, will support ROS 2, and can be used as the brain for all kinds of different robots, including the Erle Spider, a slightly misnamed hexapod that you can buy for €599.

Andreas works on robot-assisted surgery using ROS at Karlsruhe Institute of Technology. KIT has a futuristic operating room full of robots and sensors designed to help human doctors and nurses through positional tracking, augmented reality, and direct robotic assistance. Andreas is also interested in collaborating with people on ROS Medical, which doesn’t exist yet but has a really cool logo anyway.

Through the efforts of Jochen Sprickerhof and Leopold Avellaneda, there are now ROS packages available upstream in Debian unstable and Ubuntu Xenial that can be installed from the main Debian and Ubuntu repositories. The original ROS packages have been modified to follow Debian guidelines, which includes splitting packages into multiple pieces, changing names in some cases, installing to /usr according to FHS guidelines, and using soversions on shared libraries.

ROSCon 2015 Hamburg: Day 1 – Lightning Talks from OSRF on Vimeo.

Next up: Dirk Thomas, William Woodall (OSRF) & Esteve Fernandez

Check out last week’s post: Ralph Seulin of CNRS

The first step in doing something new, useful, and exciting with ROS is, without exception, learning how to use ROS. Ralph Seulin is part of CNRS in France, which, along with universities in Spain and Scotland, offers a collaborative master’s course in robotics and computer vision that includes a focus on ROS. Over four semesters, between 30 and 40 students go through the program. In this talk, Seulin discusses how ROS is taught to these students, as well as the kinds of research they apply that knowledge to.

Before Seulin’s group could effectively teach ROS to students, they had to learn ROS for themselves. This was a bit more difficult back in 2013 than it is now, but they took advantage of the ROS Wiki, read all the books on ROS they could get hold of, and of course made sure to attend ROSCon. From there, Seulin developed a series of tutorials for his students, starting with simulations and ending up with practical programming in ROS on the TurtleBot 2. Ultimately, students spend 250 hours on a custom robotics project that integrates motion control, navigation and localization, and computer vision tasks.

Seulin also makes use of ROS in application development. One of those applications is in precision vineyard agriculture because, as Seulin explains, “we come from Burgundy.” Using lasers mounted on a tractor to collect and classify 3D data, a prototype robot tractor can be used to analyze vineyard canopies and estimate leaf density. With this information, vineyards can dynamically adjust the application of agricultural chemicals, using just the right amount and only where necessary. Better for plants, better for humans, thanks to ROS.

ROSCon 2015 Hamburg: Day 1 – Ralph Seulin: ROS for education and applied research: practical experiences from OSRF on Vimeo.

Next up: Dirk Thomas, William Woodall (OSRF) & Esteve Fernandez

Check out last week’s post: Daniel Di Marco of Bosch

Daniel Di Marco is part of Deepfield Robotics, a 20-person agricultural robotics startup within Bosch. Deepfield makes robots that can, among other things, visually locate weeds and then brutally destroy them by pounding them into the dirt. In order to deliver software to their customers, Deepfield decided to create its own build farm, and Di Marco’s ROSCon presentation explains why managing a build farm internally is a good idea for a startup.

A build farm is a system that can automatically create Debian packages for you, while running integrated unit tests and generating documentation. OSRF already supports all of ROS with its own build farm, so why would anyone want to set up a build farm for themselves instead? Simple, says Di Marco: it’s something you should do if you actually want to make money with your robots.

If ROS is a part of your thriving robotics business, running a build farm allows you to do several important things. First, since you’re hosting your code on your own servers, you can maintain control over it, protecting your intellectual property and any proprietary components that you may be using. Second, you can use your build farm to distribute your packages directly to your customers, who are (presumably) paying you, and not to just anybody who swings by and wants to snag them. And lastly, you can decide what versions of different packages you want to keep using, rather than being subjected to upgrades that may not work as well.

Di Marco concludes by discussing why Docker is an easy and reliable foundation for a build farm, and how to get it set up. Most of the process has been scripted, thanks to some hard work at OSRF, and Di Marco walks us through an initial deployment to help you get your own build farm up and running.

ROSCon 2015 Hamburg: Day 1 – Daniel Di Marco: Docker-based ROS Build Farm from OSRF on Vimeo.

Next up: Ralph Seulin, Raphael Duverne, and Olivier Morel (CNRS – Univ. Bourgogne Franche-Comte)

Check out last week’s post: Ruffin White of Georgia Tech

by Tully Foote

During her Outreachy internship at Open Source Robotics Foundation, Nadya Ampilogova worked on a TurtleBot User Experience project.

The goal was to extend learn.turtlebot.com with lessons taking advantage of the simulation environment available in Gazebo. Using a simulated robot instead of a physical one makes the tutorials accessible to a larger audience. All examples use the TurtleBot, since it is a common way to start learning robotics; many universities use the TurtleBot when teaching introductory robotics courses. In creating the lessons, Nadya focused on making the content engaging and accessible by integrating images and videos. The topics include how to install software, set up tools, write your first program to control a TurtleBot, and lots more. Upon completing this tutorial, you will be able to create a TurtleBot application and test it in simulation.

The result of the project is twenty lessons about TurtleBot in simulation. They cover not only basic features but also give a brief overview of more complicated subjects. This tutorial makes studying robotics easier for people who may not have access to a real robot all of the time but who have a computer that can run the simulator.

You can find the tutorial on learn.turtlebot.com. This internship was a part of the Outreachy Program. You can read more about Nadya’s internship on her blog.

by caguero

Do you want to spend the summer coding on Gazebo or ROS? OSRF has been accepted for GSoC and we are looking for talented students who want to participate as remote interns.

Accepted students will participate in real-world software development, contributing to robotics projects and engaging with the global robotics community, all while getting paid.

Check out our GSoC site and don’t forget to visit our ideas page, which lists projects that we’re interested in. Feel free to ask questions and propose suggestions at gsoc@localhost. The student application period starts March 14th.

Get ready for a robotics coding summer!

Every day, roboticists write code that helps their robots do amazing new things. And every day, this code remains virtually useless to anyone else, because outside distribution is such a hassle. This isn’t a problem that’s unique to robotics: distributing software in general is tricky to do reliably and well, since you’re never sure what kind of system your end user has. Robotics magnifies this challenge because of the unpredictably exotic mix of hardware and software that makes up a given robot doing a given thing at a given time, combined with the extraordinarily rapid pace of advancement. Given all of this complexity, how do you make code that’s portable and robust and useful to as many people as possible?

Ruffin White, a graduate research assistant at the Institute for Robotics and Intelligent Machines at Georgia Tech (and ex-OSRF intern), discusses how Linux containers can help manage some of these issues. A Linux container is a software package that lives somewhere in between a complete virtual machine and bare code. Using a service called Docker, you can wrap up code, distros, libraries, drivers, and all the other dependencies necessary for your code to function into portable containers that still use the underlying kernel on whatever they end up getting installed on. This allows the container to be very lightweight, while also providing adaptability to local infrastructure.

White gives three different demonstrations of how Docker containers can come in handy for robots in a ROS environment. First, in an educational context, containers can provide a preset environment that students can experiment with safely. Second, for researchers, containers make it easy to test different algorithms, provide a way to publish code in a repeatable and reproducible form, and make collaborative research much simpler. Finally, industry can take advantage of containers to deploy multiple nodes on different cloud services, or to manage entire swarms of robots at once.

If you want to give ROS a try inside Docker containers, the official repository can be found at https://hub.docker.com/_/ros/.

ROSCon 2015 Hamburg: Day 1 – Ruffin White: ROS + Docker from OSRF on Vimeo.

Next up: Dejan Pangercic, Daniel Di Marco, and Arne Hamann (Robert Bosch)

Check out last week’s post: Morgan Quigley of OSRF

Morgan Quigley is first author of the authoritative 2009 workshop paper on the Robot Operating System. He’s been Chief Architect at OSRF since 2012, and in 2013, MIT Tech Review awarded Quigley a prestigious TR35 award. In addition to software development, Quigley knows a thing or two about hardware: he helped Sandia National Labs design high-efficiency bipeds for DARPA, and he also gave Sandia a hand with the development of their sensor-rich, high-DOF robotic hand.

Quigley’s ROSCon talk is focused on small (but not tiny) microcontrollers: 32-bit MCUs running at a few hundred megahertz or so, with USB and Ethernet connections. While these types of processors can’t power smartphones or run Linux, they are found in many popular embedded systems, such as the Pixhawk PX4 autopilot. Microcontrollers like these would be much easier to integrate if they all operated under a standardized communication protocol, but there are enough inconvenient hoops that have to be jumped through to run ROS on them that it’s usually not worth the hassle.

ROS 2, which doesn’t rely on a master node and has native UDP message passing, promises to work much better than ROS on distributed embedded systems. To make ROS 2 fit on a small microcontroller, Quigley demonstrates a few applications of FreeRTPS, a portable, embedded-friendly implementation of the network protocol underlying ROS 2.
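For a feel of what a minimal RTPS implementation has to handle, here is a short Python sketch that splits one RTPS datagram into its fixed 20-byte header and its submessages, following the message layout in the OMG DDSI-RTPS specification. This is not FreeRTPS code; the function and type names are hypothetical, and the sketch deliberately stops at framing, without decoding `DATA` payloads or tracking any reliability state.

```python
import struct
from typing import List, NamedTuple, Tuple

RTPS_HEADER_LEN = 20       # "RTPS" + version(2) + vendorId(2) + guidPrefix(12)
SUBMSG_HEADER_LEN = 4      # submessageId(1) + flags(1) + octetsToNextHeader(2)
PAD, INFO_TS = 0x01, 0x09  # kinds for which a length of 0 really means "empty"


class RtpsHeader(NamedTuple):
    version: Tuple[int, int]
    vendor_id: bytes
    guid_prefix: bytes


class Submessage(NamedTuple):
    kind: int
    flags: int
    body: bytes


def parse_rtps(datagram: bytes) -> Tuple[RtpsHeader, List[Submessage]]:
    """Split one RTPS datagram into its fixed header and its submessages."""
    if len(datagram) < RTPS_HEADER_LEN or datagram[:4] != b"RTPS":
        raise ValueError("not an RTPS message")
    header = RtpsHeader(
        version=(datagram[4], datagram[5]),
        vendor_id=datagram[6:8],
        guid_prefix=datagram[8:20],
    )
    submessages = []
    offset = RTPS_HEADER_LEN
    while offset + SUBMSG_HEADER_LEN <= len(datagram):
        kind, flags = datagram[offset], datagram[offset + 1]
        # Bit 0 of flags (the E flag) selects this submessage's byte order.
        endian = "<" if flags & 0x01 else ">"
        (length,) = struct.unpack_from(endian + "H", datagram, offset + 2)
        start = offset + SUBMSG_HEADER_LEN
        if length == 0 and kind not in (PAD, INFO_TS):
            end = len(datagram)  # last submessage: runs to the end of the datagram
        else:
            end = start + length
        if end > len(datagram):
            raise ValueError("truncated submessage")
        submessages.append(Submessage(kind, flags, datagram[start:end]))
        offset = end
    return header, submessages


if __name__ == "__main__":
    # Version 2.1, placeholder vendor ID and GUID prefix, one little-endian DATA (0x15).
    msg = (b"RTPS" + bytes([2, 1]) + b"\x00\x00" + bytes(12)
           + bytes([0x15, 0x01]) + struct.pack("<H", 4) + b"abcd")
    hdr, subs = parse_rtps(msg)
    print(hdr.version, subs[0].kind == 0x15, subs[0].body)  # (2, 1) True b'abcd'
```

Framing like this needs only fixed offsets and a per-submessage length field, which is one reason the protocol is a plausible fit for a small MCU.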

After showing the impressive results of some torture tests on a Discovery board, Quigley talks about what’s coming next, including a focus on even smaller microcontrollers (like Arduino boards that communicate over USB rather than Ethernet). Eventually, Quigley suggests that ROS 2 will be small and light enough to run on the microcontrollers inside sensors and actuators themselves, simplifying real-time control.

ROSCon 2015 Hamburg: Day 1 – Morgan Quigley: ROS 2 on “small” embedded systems from OSRF on Vimeo.

Next up: Ruffin White of the Institute for Robotics & Intelligent Machines at Georgia Tech

Check out last week’s post: Roman Bapst of ETH Zurich and PX4

PX4 is a flight control software stack for autonomous aerial robots that describes itself as “rocket science straight from the best labs, powering anything from racing to cargo drones.” One of these labs is at ETH Zurich, where Roman Bapst serves on the faculty. Bapst works on computer vision and actively contributes to the PX4 autopilot platform.

Bapst starts out by describing some of the great things about the PX4 autopilot: it’s open source, open hardware, and supported by the Linux Foundation’s Dronecode Project, which provides a framework under which developers can contribute to an open source standard platform for drones. PX4 runs on 3D Robotics’ Pixhawk hardware, and once you hook up some sensors and servos, it will autonomously pilot nearly anything that flies – from conventional winged aircraft to multicopters to hybrids.

One of PX4’s distinctive features is its modular architecture, which is structurally very similar to that of ROS. This means that you can run PX4 modules as ROS nodes, while taking advantage of other ROS packages alongside PX4 to do things like vision-based navigation and control. Additionally, it lets you easily simulate PX4-based drones within Gazebo, which, unlike real life, has a free reset button that you can push after a crash.

The PX4 team is currently getting their software modules running as ROS nodes on Qualcomm’s Snapdragon Flight drone development platform, which would be a very capable (and affordable) way of getting started with a custom autonomous drone.

ROSCon 2015 Hamburg: Day 1 – Roman Bapst: ROS on DroneCode Systems from OSRF on Vimeo.

Next up: Morgan Quigley of OSRF

Check out last week’s post: Gary Servín of Ekumen

What’s ROS android_ndk? Why should you care? How does it work? Gary Servín is with Ekumen, an engineering and software consulting company based in Buenos Aires, Argentina, that specializes in ROS, web, and Android development. With the backing of Qualcomm and OSRF, Ekumen has been trying to make it both possible and easy to run ROS applications on small Android devices like tablets and cellphones using Android’s Native Development Kit (NDK).

As Gary explains, the increasing performance, decreasing cost, and overall ubiquity of Android devices make them ideal brains for robots. The tricky part is getting ROS packages to play nice with Android, which is where ROS android_ndk comes in: it’s a set of scripts that Ekumen is working on to make the process much easier. Unlike rosjava, ROS android_ndk gives you access to 181 packages from the desktop variant of ROS, with the ability to run native ROS nodes directly.

Ekumen is actively working on this project, with plans to incorporate wrappers for rosjava, an actionlib implementation, and support for ROS 2. In the meantime, there’s already a set of tutorials on ROS.org that should help you get started.

ROSCon 2015 Hamburg: Day 1 – Gary Servin: ROS android_ndk: What? Why? How? from OSRF on Vimeo.

Next up: Lorenz Meier & Roman Bapst of ETH Zurich and PX4

Check out last week’s post: Stefan Kohlbrecher of Technische Universitaet Darmstadt

Stefan’s early research using tiny soccer-playing humanoid robots at Technische Universität Darmstadt in Germany prepared him well for software development on much larger humanoid robots that don’t play soccer at all. From the Darmstadt Dribblers RoboCup team to the DARPA Robotics Challenge Team ViGIR, Stefan has years of experience with robots that need to walk on two legs and do things while not falling over (much).

Almost all of the software that Team ViGIR used to control its ATLAS robot (named Florian) was ROS-based. Stefan credits the team’s prior experience with ROS, ROS’s existing software base, and its vibrant community for Team ViGIR’s decision to go with ROS from the very beginning. Controlling the ATLAS robot is exceedingly complex, and Stefan takes us through the software infrastructure that Team ViGIR used during the DRC: from basic perception to motion planning to manipulation and user interfaces.

With lots of pictures and behind-the-scenes videos, Stefan describes how Team ViGIR planned to tackle the challenging DRC Finals course. The team used both high-level autonomy and low-level control, with an emphasis on dynamic, flexible collaboration between robot and operator. Stefan narrates footage of both of Florian’s runs at the DRC Finals; each was eventful, but we won’t spoil it for you.

To wrap up his talk, Stefan describes some of the lessons that Team ViGIR learned through their DRC experience: about ROS, about ridiculously complex humanoid robots, and about participating in a global robotics competition.

ROSCon 2015 Hamburg: Day 1 – Stefan Kohlbrecher: An Introduction to Team ViGIR’s Open Source Software and DRC Post Mortem from OSRF on Vimeo.

Next up: Gary Servin of Creativa77

Check out last week’s post: Mark Shuttleworth of Canonical

In 2004, Canonical released the first version of Ubuntu, a Debian-based open source Linux OS that provides one of the main operational foundations of ROS. Canonical’s founder, Mark Shuttleworth, was CEO of the company until 2009, when he transitioned to a leadership role that lets him focus more on product design and partnerships. In 2002, Mark spent eight days aboard the International Space Station, but that was before the ISS was home to a ROS-powered robot. He currently lives on the Isle of Man with 18 ducks and an occasional sheep. Ubuntu was a platinum co-sponsor of ROSCon 2015, and Mark gave the opening keynote on developing a business in the robot age.

Changes in society and business are both driven by changes in technology, Mark says, encouraging those developing technologies to consider the larger consequences that their work will have, and how those consequences will result in more opportunities. Shuttleworth suggests that robotics developers really need two things at this point: a robust Internet of Things infrastructure, followed by the addition of dynamic mobility that robots represent. However, software is a much more realistic business proposition for a robotics startup, especially if you leverage open source to create a developer community around your product and let others innovate through what you’ve built.

To illustrate this principle, Mark shows a live demo of a hexapod called Erle-Spider, along with a robust, high-level ‘meta’ build and packaging tool called Snapcraft. Snapcraft makes it easy for users to install software and for developers to structure and distribute it without having to worry about conflicts or inter-app security. The immediate future promises opportunities for robotics in entertainment and education, Mark says, especially if hardware, ROS, and an app-like economy can come together to give developers easy, reliable ways to bring their creations to market.

ROSCon 2015 Hamburg: Day 1 – Mark Shuttleworth: Commercial models for the robot generation from OSRF on Vimeo.

Next up: Stefan Kohlbrecher of Technische Universitaet Darmstadt

Check out last week’s post: OSRF’s Brian Gerkey

ROSCon is an annual conference focused on ROS, the Robot Operating System. Every year, hundreds of ROS developers of all skill levels and backgrounds, from industry to academia, come together to teach, learn, and show off their latest projects. ROSCon 2015 was held in Hamburg, Germany. Beginning today and each week thereafter, we’ll be highlighting one of the talks presented at ROSCon 2015.

Brian Gerkey is the CEO of the Open Source Robotics Foundation, which oversees core ROS development and helps to coordinate the efforts of the ROS community. Brian helped found OSRF in 2012, after directing open source development at Willow Garage.

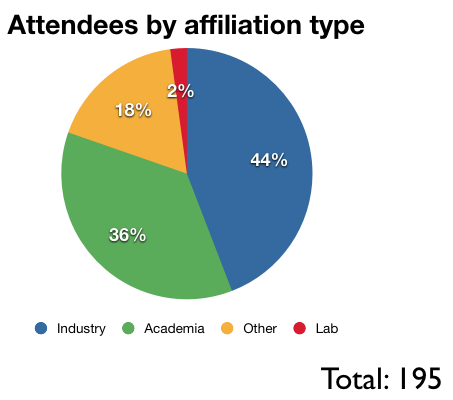

Unless you’d like to re-live the ROSCon Logistics Experience, you can skip to 5:10 in Brian’s opening remarks, where he provides an overview of ROSCon attendees and ROS user metrics that shows how diverse the ROS community has become. Brian touches on what’s happened with ROS over the last year, along with the future of ROS and OSRF, and what we have to look forward to in 2016. He also discusses DARPA’s Robotics Fast Track program, which has a submission deadline of January 31, 2016.

ROSCon 2015 Hamburg: Day 1 – Opening Remarks from OSRF on Vimeo.

Next up, Mark Shuttleworth from Canonical.

OSRF CEO Brian Gerkey was recently asked to contribute his thoughts to O’Reilly Media’s Next: Economy Summit. Called “WTF”, the event tackled the question of how work, business, and society will face massive technology-driven change. His contribution was entitled “Looking Forward to the Robot Economy”. Check out the excerpt below.

Let’s talk about robots. In the near future, robots will learn how to do more and more of the things that we humans are used to doing ourselves. Watching the progress of robotic technology over the last several years has caused a wild and so far mostly theoretical economic panic about the future of humans in the workplace. But despite the flood of articles suggesting that the human worker is doomed, robots are almost certainly not going to take your job anytime soon.

Open Source Robotics Foundation is joining DARPA and Tandem National Security Innovations on the road to spread the word about DARPA’s Robotics Fast Track Program. We’re proud to support the opportunity for startups and roboticists to receive $150,000 to build a robot prototype.

Join us in Seattle, Redwood City, Livermore, or San Diego (or all four!) to learn more about this innovative program, which is designed to make it easier to get projects funded by the Pentagon’s leading advanced research lab in weeks, not months or years. Whether you’re a roboticist, technologist, or entrepreneur, you’ll want to join us for this rare opportunity. Each event will begin with an informational session about the program, followed by the opportunity for attendees to meet privately with representatives from DARPA, OSRF, and TandemNSI to ask specific questions about individual projects. Food and drink will be provided at each of our free events, so come out and join us.

Date/Time: Nov. 17, 2015 from 6:30 p.m. to 8:30 p.m.

Location: The Maker’s Space (92 Lenora Street, Seattle, WA 98121)

Register Here

Lunch in Livermore

Date/Time: Nov. 18, 2015 from 11:30 a.m. to 1 p.m.

Location: i-GATE Innovation Hub (2324 Second St, Livermore, CA 94550)

Register Here

Evening in Redwood City

Date/Time: Nov. 18, 2015 from 6:30 p.m. to 8:30 p.m.

Location: TechShop Mid Peninsula (2415 Bay Road, Redwood City, CA 94063)

Register Here

Date/Time: Nov. 19, 2015 from 6:30 p.m. to 8:30 p.m.

Location: The Basement — UC San Diego (9500 Gilman Dr., La Jolla, CA 92093)

Register Here

To learn even more about the program, the Robotics Fast Track website has specifics on how to apply, and more details on the technologies that DARPA seeks. We look forward to seeing you at one of the West Coast events!

Happy Halloween from OSRF! Our commitment to wearing costumes at work is as strong as our commitment to open source software!

This year’s ROSCon is nearly upon us. If you haven’t yet made plans to get yourself to Hamburg, you are out of luck, as this year’s ROSCon has sold out. The good news is that we are live streaming ROSCon presentations free of charge, courtesy of Qualcomm.

Click here for the live stream, beginning at 9:00 a.m. CEST (12:00 a.m. PDT or 3:00 a.m. EDT) on October 3, 2015.

All sessions will also be recorded and made available for viewing in the near future. Follow @OSRFoundation for announcements about their availability.

A final thank you to Platinum Sponsors Fetch Robotics and Ubuntu; Gold Sponsors 3D Robotics, Bosch, Clearpath Robotics, GaiTech, Magazino, NVIDIA, Qualcomm, Rethink Robotics, Robotis, Robotnik, ROS-Industrial, Shadow Robot Company, SICK and Synapticon; and Silver Sponsors Erle Robotics and Northwestern University, McCormick School of Engineering.

Check out the program here.

If you’re attending in-person, we look forward to seeing you soon!

by Ian Chen

During his internship with OSRF, Mike Kasper developed a new ignition-robotics rendering library. The key feature of this library is that it provides an abstract render-engine interface for building and rendering scenes, which allows the library to employ multiple underlying render engines. The motivation for this work was to extend Gazebo’s rendering capabilities to provide near photo-realistic imagery for simulated camera sensors. This imagery could then be used for the development and testing of perception algorithms.

As Gazebo currently employs the Object-Oriented Graphics Rendering Engine (OGRE), an OGRE-based implementation has been added to the ignition-rendering library. Additionally, a render-engine based on NVIDIA’s OptiX ray-tracing engine has been implemented. The current OptiX-based render-engine uses simple ray-tracing techniques, but will use physically based path-tracing techniques in the future to generate photo-realistic imagery.

The following videos give an overview of the library’s current capabilities:

by Brian Gerkey

OSRF has been intimately involved in the DARPA Robotics Challenge (DRC) from the beginning in June 2012, when we started getting Gazebo into shape to meet the simulation needs of DRC teams, including hosting the Virtual Robotics Challenge (VRC) in June 2013. So the DRC Finals this past weekend was a special event for us, representing three years of work from our team.

We were especially excited to see widespread use of both ROS and Gazebo during the two-day competition. Walking through the team garage area during the finals, we saw many screens showing rviz and other ROS tools, and even one with a browser open to ROS Answers (some last-minute debugging, we assume).

We talked with several teams who used Gazebo in their software development and testing, including teams using robots other than the Atlas that we modeled for the VRC. In the post-DRC workshop on Sunday, both first-place Team KAIST and third-place Tartan Rescue discussed their use of Gazebo, in particular for developing solutions to fall-recovery and vehicle egress. That’s one of the reasons we work on Gazebo: to give roboticists the tools they need to safely develop robot software to handle unsafe situations, without risk to people or hardware.

Based on our observations at the competition and communications with team members, out of the 23 DRC Finals teams, we count 18 teams using ROS and 14 teams using Gazebo. We couldn’t be happier to see such impact from open source robot software!

Join OSRF at the biggest robotics event of the year, the DARPA Robotics Challenge (DRC) Finals! If you’re looking for some excitement on June 5 and 6, head on over to the Fairplex in Pomona, Calif. for two days of free robotics competition and demonstrations. OSRF will be at the Expo in full force with 4 interactive demos and more stickers than you have surfaces to stick them on.

The DRC Finals will be divided into two parts. The Technology Expo will offer dozens of interactive exhibits and demonstrations, and the competition Grandstands will provide an excellent view as 25 robotics organizations from around the world compete for $3.5 million in prizes.

We’ve worked on the DARPA Robotics Challenge since it kicked off in 2012. In June 2013, we helped host the DARPA Virtual Robotics Challenge which awarded 8 Boston Dynamics Atlas robots to the most successful competitors. In December 2013, we participated in the Expo at the DRC Trials in Florida. It has been thrilling to take part in such an impressive, impactful robotics program, and we can’t wait to witness the grand conclusion in just one week.

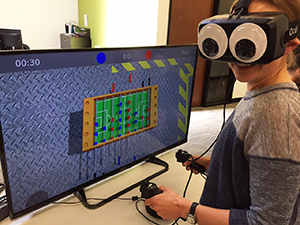

If you’re in the Pomona area next Friday and Saturday, we’d love to see you at the OSRF booth (#14). Just hang a left as you enter the Expo area, and you’ll see us a few booths down on the right side. We’ll have a handful of demos for you to check out, including simulated foosball and drone flying, a motion capture system with haptic feedback, and a bale of Turtlebots you can drive around.

For more information, check out the DRC Finals website: http://www.theroboticschallenge.org/.

Here’s a map of the Expo area:

We’re excited to announce that OSRF and BIT Systems are seeking innovative and revolutionary robotics projects for the Robotics Fast Track (RFT) effort, sponsored by the Defense Advanced Research Projects Agency (DARPA).

The goals of Robotics Fast Track are:

Learn more and apply at rft.osrfoundation.org!

This piece was written as a precursor to the Silicon Valley Robotics panel titled “Women in Robotics: Challenge or Opportunity?” Join us at IDEO’s San Francisco office on Wednesday, April 29 at 7 pm to hear from women in a wide range of robotics roles. Tickets can be purchased here.

Let me get the basic statistics out of the way. It is widely acknowledged and reported that women are grossly underrepresented in the STEM fields. The U.S. Department of Commerce’s Economics and Statistics Administration (ESA) reported in 2011 that women hold less than 25% of the country’s STEM jobs. Women comprise a mere 17% of Google’s technology workforce; Apple sits at 20%. There are many factors at play, from what the ESA describes as the lack of “family-friendly flexibility in the STEM fields,” to gender discrimination and stereotyping, to the shortage of strong female role models.

It is the latter point that I wish to touch on first, because indeed, I found my niche in robotics due in large part to the outstanding mentor I found in Leila Takayama. I met Leila during an internship at Willow Garage, back when I was struggling to apply a psychology degree to a burgeoning interest in badass robots. I began at Willow as an operations intern. I did everything from inventory management, to lunch clean-up, to multimeter measurements on robot stress tests, to light switch labeling. As much as label making satisfies my deepest desire for order, I saw everyone around me designing and building the PR2. At that time, the PR2 was arguably the world’s most sophisticated personal robot. I wanted in on the action. That’s about as far as I got, though – a keen interest in robots – until Leila joined and introduced me to Human-Robot Interaction.

Without a doubt, it was my experience as Leila’s research assistant, her advice and encouragement, and the challenges she emboldened me to take on, that opened my eyes to how I could make a place for myself in the world of robotics. With Leila as my role model and mentor, I conducted HRI research, co-authored a paper, gradually became comfortable being surrounded by a nearly all-male engineering team, and shed the sometimes crippling doubt about whether I had what it took to work in a highly technical field. Witnessing Leila successfully navigate this male-dominated industry cemented in me the confidence I needed to pursue a career in robotics, not only as a woman, but as a designer and researcher.

At Leila’s urging, I applied and was accepted to Carnegie Mellon University’s Human-Computer Interaction master’s program. This turned out to be the second most influential factor in getting me to where I am now: co-founder and Lead UX Designer at OSRF. I mention this because, while UX researchers and designers are now in high demand at technology companies, that path would have been unknown to me without Leila’s example.

The message here is twofold: find a mentor within robotics, as I did with Leila; and realize there can be more to a STEM career than engineering alone. There is enormous opportunity for women as researchers and designers; there are countless ways for women to kick ass in the robotics field. Don’t misunderstand me: be an engineer if that’s where your passion lies, but know that there’s more to robotics than engineering.

As robots become increasingly commonplace in our everyday lives, the need for robotics designers and researchers (and engineers) is growing, opening up vast opportunities to shape how robots function and behave, and how we in turn perceive and interact with them. Just one simple example: What should happen during a robot-human encounter in a narrow hallway? When only humans are involved, numerous social norms govern how to negotiate passage. Bringing such an encounter to a satisfactory and natural conclusion when a robot is involved requires deep technical expertise as well as social and user experience expertise. Who knows, maybe having a female perspective in this research means that robots will never be guilty of manslamming.

For robots to weave seamlessly into daily life, we must design and construct them with a keen understanding of preference differences not just between genders, but within male and female populations as well. Robots need to appear non-threatening yet capable, accommodate personal space preferences, and provide the desired amount of information in the communication style or tone most effective for their current audience. Robots designed and built exclusively by men, or exclusively by women, will fail in some regards to meet the needs of half their users. If we wish for robotic technologies to flourish, it would be foolhardy to accept the STEM fields’ gender ratio status quo.

Robotics is special among technical areas in that the range of required specialties is exceptionally broad. Without an understanding of technical, psychological, cognitive, and social factors, robotics will stay locked in limited industrial application areas. Such a limitation would be an enormous lost opportunity.

by Brian Gerkey

Our friends at Clearpath Robotics announced today that they’re offering ROS consulting services for enterprise R&D projects. And they’ve committed to giving part of the proceeds to OSRF, to support the continued development and support of ROS!

This service is something that we’ve heard requested many times, especially from our industry users, and we’re excited that Clearpath is going to offer it. If you’re looking for help or advice in using ROS on a current or upcoming project, get in touch with Clearpath.

by Brian Gerkey

As part of the run-up to the DARPA Robotics Challenge Finals in June, check out this piece on the role of tools like Gazebo: “The Value of Open Source Simulation for Robotics Development and Testing.”

Stay tuned for previews of what we’ll be showing off at the DRC Finals Expo…

by Brian Gerkey

We reported last year that our friends at MathWorks had released ROS support for MATLAB. They presented that work in a talk at ROSCon 2014.

That ROS support has now been promoted into an official MATLAB toolbox, called the Robotics System Toolbox. You can work in MATLAB with any ROS-enabled robot, simulator, or log data. Check out their overview video!

by Tully Foote

Accepted students will participate in real-world software development, contributing to robotics projects like Gazebo, ROS, and Ignition Transport, and engaging with the global robotics community, all while getting paid. As a bonus, this year we also offer ROS-Industrial projects.

Check out our GSoC site and don’t forget to visit our ideas page, which lists projects that we’re interested in. Feel free to ask questions and propose suggestions at gsoc@localhost. The student application period starts March 16th. Get ready for a robotics coding summer!

by Brian Gerkey

We’re pleased to announce that OSRF has joined the Dronecode Project, which promotes open source platforms for Unmanned Aerial Vehicles (UAVs). That mission, plus the burgeoning use of ROS and Gazebo in UAV development, make Dronecode and OSRF natural partners.

We’ll work with Dronecode to make our tools even more useful for UAV projects. We’ll also bring together the general robotics community and the aerial robotics community. Both groups have valuable tools and capabilities which can be shared, to everyone’s benefit.

We look forward to getting more involved with the UAV community and seeing some amazing open source flying robots.

by Brian Gerkey

At the end of January, Baxter left OSRF for a stint in Corvallis, Oregon where he will be used in a project that is investigating the use of teleoperated robots in the treatment of highly contagious diseases such as Ebola. He will be joining the Personal Robotics Group, part of Oregon State University’s growing Robotics Program, as part of their NSF-funded work to bring robots to the front lines of the current Ebola outbreak.

Health care workers are at the highest risk of exposure when working in close proximity to infected patients. Even the use of personal protective equipment still exposes workers to considerable risk of infection. These risks are due to both faulty practice and extreme conditions, especially in harsh locations such as West Africa where high temperatures and humidity present real operational challenges. The use of intuitive teleoperation interfaces will enable health care workers to remotely operate robots (such as Baxter) to perform significant portions of their jobs from a safe distance. Examples of potential tasks include patient monitoring, equipment moving, and contaminated material disposal. This will allow health care workers to provide needed care while maintaining the important patient-health care worker interaction, all while making their jobs safer and more tolerable.

Here is an example of a task that Baxter will help investigate, as performed by the PR2:

by Brian Gerkey

The Gazebo team has been hard at work setting up a simulation environment for the Defense Advanced Research Projects Agency (DARPA)’s Hand Proprioception and Touch Interfaces (HAPTIX) program. The goal of the HAPTIX program is to provide amputees with prosthetic limb systems that feel and function like natural limbs, and to develop next-generation sensorimotor interfaces to drive and receive rich sensory content from these limbs. Managed by Dr. Doug Weber, HAPTIX is being run out of DARPA’s Biological Technologies Office (BTO).

As the organization maintaining Gazebo, OSRF has been tasked with extending Gazebo to simulate prosthetic hands and test environments, and with developing both graphical and programming interfaces to the hands. OSRF is officially releasing a new version of Gazebo for use by HAPTIX participants. Highlights of the new release include support for the OptiTrack motion capture system; the NVIDIA 3D Vision system; numerous teleoperation options including the Razer Hydra, SpaceNavigator, mouse, mixer board and keyboard; a high-dexterity prosthetic arm; and programmatic control of the simulated arm using Linux, Windows and MATLAB. More information and tutorials are available at the Gazebo website. Here’s an overview video:

“Our track record of success in simulation as part of the DARPA Robotics Challenge makes OSRF a natural partner for the HAPTIX program,” according to John Hsu, Chief Scientist at Open Source Robotics Foundation. “Simulation of prosthetic hands and the accompanying GUI will significantly enhance the HAPTIX program’s ability to help restore more natural functionality to wounded service members.”

Gazebo is an open source simulator that makes it possible to rapidly test algorithms, design robots, and perform regression testing using realistic scenarios. Gazebo provides users with a robust physics engine, high-quality graphics, and convenient programmatic and graphical interfaces. Gazebo was the simulation environment for the VRC, the Virtual Robotics Challenge stage of the DARPA Robotics Challenge.

This project also marks the first time Windows and MATLAB users can interact with Gazebo, thanks to our new cross-platform transport library. The scope is limited to the HAPTIX project; however, plans are in motion to bring the entire Gazebo package to Windows.

Teams participating in HAPTIX will have access to a customized version of Gazebo that includes a simulated model of the Johns Hopkins University Applied Physics Laboratory Modular Prosthetic Limb (MPL), developed under the DARPA Revolutionizing Prosthetics program, as well as representative physical therapy objects used in clinical research environments.

More details on HAPTIX can be found in the DARPA announcement.

by Brian Gerkey