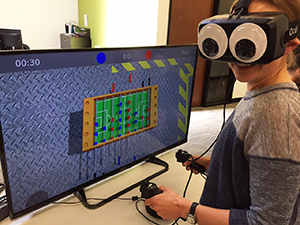

The IEEE Vision, Innovation, and Challenges Summit features 15 world-class speakers who will dive deep into the most crucial areas of technology today, including robotics, genome therapy, mixed reality, geometric processing and animated film, the role of engineers in today’s society, and more.

Members of the Open Robotics community can save 20% on registration by using the discount code VICSRobotics.

This year, the Summit will feature a panel entitled “Robots on the Rise, The Future of Robotics and AI,” moderated by Stanford University Professor Oussama Khatib and joined by panelists Cynthia Breazeal, Associate Professor at MIT and Founder and Chief Scientist of Jibo, Inc.; Eric Krotkov, COO at Toyota Research Institute; Marc Raibert, Founder and CEO of Boston Dynamics; and Grant Imahara, Consulting Mechanical Designer at Disney Research and host of Discovery Channel’s MythBusters.

Marc Raibert of Boston Dynamics will be bringing SpotMini – the robot dog.

After the day’s sessions, there is a night of festivities and entertainment with the IEEE Honors Ceremony, which celebrates the contributions of some of the greatest minds of our time who have made a lasting impact on society for the benefit of humanity. In other words, the Academy Awards for Technologists and Engineers.

Your all-access pass includes:

● All summit sessions

● Networking breakfast and demos

● Luncheon mixer and networking reception

● Ticket to the Honors Ceremony with 3-course dinner

● Entertainment

Learn more and register at www.ieee-vics.org. This event will sell out, so secure your seat early.


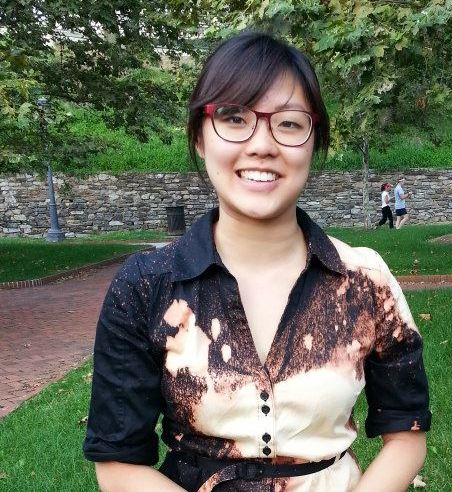

We’ve supported and relied on Ubuntu Linux since the beginning of the ROS project, and we’re excited to be part of this transition to a new Ubuntu-based app ecosystem.
